the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Potential artifacts in conservation laws and invariants inferred from sequential state estimation

Carl Wunsch

Patrick Heimbach

In sequential estimation methods often used in oceanic and general climate calculations of the state and of forecasts, observations act mathematically and statistically as source or sink terms in conservation equations for heat, salt, mass, and momentum. These artificial terms obscure the inference of the system's variability or secular changes. Furthermore, for the purposes of calculating changes in important functions of state variables such as total mass and energy or volumetric current transports, results of both filter and smoother-based estimates are sensitive to misrepresentation of a large variety of parameters, including initial conditions, prior uncertainty covariances, and systematic and random errors in observations. Here, toy models of a coupled mass–spring oscillator system and of a barotropic Rossby wave system are used to demonstrate many of the issues that arise from such misrepresentations. Results from Kalman filter estimates and those from finite interval smoothing are analyzed. In the filter (and prediction) problem, entry of data leads to violation of conservation and other invariant rules. A finite interval smoothing method restores the conservation rules, but uncertainties in all such estimation results remain. Convincing trend and other time-dependent determinations in “reanalysis-like” estimates require a full understanding of models, observations, and underlying error structures. Application of smoother-type methods that are designed for optimal reconstruction purposes alleviate some of the issues.

- Article

(7985 KB) - Full-text XML

- BibTeX

- EndNote

Intense scientific and practical interest exists in understanding the time-dependent behavior in the past and future of elements of the climate system, especially those represented in a reanalysis. Expert practitioners of the methodology of reanalysis, particularly on the atmospheric side (e.g., Dee, 2005; Cohn, 2010; Janjić et al., 2014; and Gelaro et al., 2017), clearly understand the pitfalls of the methodologies, but many of these discussions are couched in the mathematical language of continuous space–time (requiring the full apparatus of functional analysis) and/or the specialized language of atmospheric sciences. Somewhat controversial, contradictory results in the public domain (e.g., Hu et al., 2020 or Boers, 2021) suggest that, given the technical complexities of a full reanalysis computation, some simple examples of the known difficulties with sequential analysis methods could be helpful. For scientists interested in the results but not fully familiar with the machinery being used, it is useful to have a more schematic, simplified set of examples so that the numerous assumptions underlying reanalyses and related calculations can be fully understood. Dee (2005) is close in spirit to what is attempted here. As in geophysical fluid dynamics methods, two “toy models” are used to gain insight into issues applying to far more realistic systems.

Discussion of even the simplified systems considered below requires much notation. Although the Appendix writes out the fuller notation and its applications, the basic terminology used is defined more compactly here. Best estimates of past, present, and future invoke knowledge of both observations and models, and both involve physical–dynamical, chemical, and biological elements.

To understand and interpret the behavior of physical systems one must examine long-term changes in quantities that are subject to system invariants or conservation rules, e.g., energy, enstrophy, total mass, or tracer budgets. Conservation rules in physical systems imply that any changes in the quantity are attributable to identifiable sources, sinks, or dissipation in the interior as well as in the boundary conditions and that they are represented as such in the governing equations. In the absence of that connection in, e.g., mass or energy conservation, claims of physical understanding must be viewed with suspicion. In climate science particularly, violations undermine the ability to determine system trends in physical quantities such as temperature or mass, as well as domain-integrated diagnostics (integrated heat and mass content) over months, decades, or longer.

Two major reservoirs of understanding of systems such as those governing the ocean or climate overall lie with observations of the system and with the equations of motion (e.g., Navier–Stokes) believed applicable. Appropriate combination of the information from both reservoirs generally leads to improvement over estimates made from either alone but should never degrade them. Conventional methods for combining data with models fall into the general category of control theory in both mathematical and engineering forms, although full understanding is made difficult in practice by the need to combine major sub-elements of different disciplines, including statistics of several types, computer science, numerical approximations, oceanography, meteorology, climate, dynamical systems theory, and the observational characteristics of very diverse instrument types and distributions. Within the control theory context, distinct goals include “filtering” (what is the present system state?), “prediction” (what is the best estimate of the future state?), and “interval smoothing” (what was the time history over some finite past interval?) and their corresponding uncertainties.

In oceanography, as well as climate physics and chemistry more generally, a central tool has become what meteorologists call a “reanalysis,” – a time-sequential estimation method ultimately based on long experience with numerical weather prediction. Particular attention is called, however, to Bengtsson et al. (2004), who showed the impacts of observational system shifts on apparent climate change outcomes arising in some sequential methods. A number of subsequent papers (see, for example, Bromwich and Fogt, 2004; Bengtsson et al., 2007; Carton and Giese, 2008; and Thorne and Vose, 2010) have noticed difficulties in using reanalyses for long-term climate properties, sometimes ending with advice such as “minimize the errors” (see Wunsch, 2020, for one global discussion).

For some purposes, e.g., short-term weather or other prediction, the failure of the forecasting procedure (consisting of cycles producing analysis increments from data followed by model forecast) to conserve mass, energy, or enstrophy may be of no concern – as the timescale of emergence for detectable consequences of that failure can greatly exceed the forecast time. In contrast, for reconstruction of past states, those consequences can destroy any hope of physical interpretation. In long-duration forecasts with rigorous models, which by definition contain no observational data at all, conservation laws and other invariants of the model are preserved, provided their numerical implementation is accurate. Tests, however, of model elements and in particular of accumulating errors are then not possible until adequate data do appear.

We introduce notation essential for the methods used throughout the paper in Sect. 2. Experiments that examine the impact of data on reconstruction of invariants in a mass–spring oscillator system are discussed in Sect. 3. This section includes the impact of data density and sparsity on reconstructions of energy, position, and velocity and ends with a discussion of the structure of the covariance matrix. Section 4 covers the Rossby wave equation and examines a simplified dynamical system resembling a forced Rossby wave solution. Here a combination of the Kalman filter and the Rauch–Tung–Striebel smoother (both defined fully in Bryson and Ho, 1975 and Wunsch, 2006) is used to reconstruct generalized energy and the time-independent transport of a western boundary current. Results and conclusions are discussed in Sect. 5.

All variables, independent and dependent, are assumed to be discrete. Notation is similar to that in Wunsch (2006). Throughout the paper italic lowercase bold variables are vectors and uppercase bold variables are matrices.

Let x(t) be a state vector for time , where Δt is a discrete time step. A state vector is one that completely describes a physical system evolving according to a model rule (in this case, linear),

where Δt is the constant time step. A(t) is a square “state transition matrix”, and B(t)q(t) is any imposed external forcing, including boundary conditions, with B(t) a matrix distributing disturbances q(t) appropriately over the state vector. Generally speaking, knowledge of x(t) is sought by combining Eq. (1) with a set of linear observations,

Here, E(t) is another known matrix, which typically vanishes for most values t and represents how the observations measure elements of x(t). The variable n(t) is the inevitable noise in any real observation and for which some basic statistics will be assumed. Depending upon the nature of E(t), Eq. (2) can be solved by itself for an estimate of x(t). (As part of the linearization assumption, neither E(t) nor n(t) depends upon the state vector.)

Estimates of the (unknown) true variables x(t) and q(t) are written with tildes: . As borrowed from control theory convention, the minus sign in denotes a prediction of x(t) not using any data at time t but possibly using data from the past. If no data at t are used then . Similarly, the plus sign in indicates an estimate at time t where data future to time t have also been employed. In what follows, the “prediction” model is always of the form in Eq. (1), but usually with deviations in x(0) and in q(t), which must be accounted for.

In any estimation procedure, knowledge of the initial state elements and resulting uncertainties is required. A bracket is used to denote expected value, e.g., the variance matrix of any variable ξ(t) is denoted,

and where a is usually the true value of ξ(t) or some averaged value. (When ξ is omitted in the subscript, P refers to .)

Together, Eqs. (1) and (2) are a set of linear simultaneous equations for x(t) and possibly q(t), which, irrespective of whether overdetermined or underdetermined, can be solved by standard inverse methods of linear algebra. For systems too large for such a calculation and/or ones in which data continue to arrive (e.g., for weather) following a previous calculation, one moves to using sequential methods in time.

Suppose that, starting from t=0, a forecast is made using only the model Eq. (1) until time t, resulting in and a model-alone forecast . Should a measurement y(t+Δt) exist, a weighted average of the difference between y(t+Δt) and its value predicted from provides the “best” estimate, where the relative weighting is by the inverse of their separate uncertainties. In the present case, this best estimate at one time step in the future is given by

As written, this operation is known as the innovation form of the “Kalman filter” (KF), and K(t+Δt) is the “Kalman gain.” Embedded in this form are the matrices and R(t+Δt), which denote the uncertainty of the pure model prediction at time t and the covariance of the observation noise (usually assumed to have zero mean error), respectively. The uncertainties and P(t) evolve with time according to a matrix Riccati equation; see Appendix A, Wunsch (2006), or numerous textbooks such as Stengel (1986) and Goodwin and Sin (1984) for a fuller discussion. Although possibly looking unfamiliar, Eq. (4) is simply a convenient rewriting of the matrix-weighted average of the model forecast at t+Δt with that determined from the data. If no data exist, both y(t+Δt) and E(t+Δt) vanish, and the system reduces to the ordinary model prediction.

Without doing any calculations, some surmises can be made about system behavior from Eq. (4). Among them are the following (a) if the initial condition (with uncertainty P(0)) has errors, the time evolution will propagate initial condition errors forward. Similarly, however obtained, any error in with uncertainty covariance will be propagated into . (b) The importance of the data versus the model evolution depends directly on the ratio of the norms of and R(τ). Lastly (c), and most important for this paper, the data disturbances appear in the time evolution equation (Eq. 4) fully analogous to the external source–sink or boundary condition term. Conservation laws implicit in the model alone will be violated in the time evolution, and ultimately methods to obviate that problem must be found.

Although here the true Kalman filter is used for toy models to predict time series, in large-scale ocean or climate models this is almost never the case in practice. Calculation of the P matrices from Eq. (A1 in the Appendix) is computationally so burdensome that K(t) is replaced by some very approximate or intuitive version, usually constant in time, potentially leading to further major errors beyond what is being discussed here.

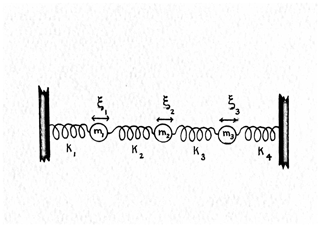

Consider the intuitively accessible system of a mass–spring oscillator, following McCuskey (1959), Goldstein (1980), Wunsch (2006), or Strang (2007), initially in the conventional continuous time formulation of simultaneous differential equations. Three identical masses, m=1, are connected to each other and to a wall at either end by springs of identical constant, k (Fig. 1). Movement is damped by a Rayleigh friction coefficient r. Generalization to differing masses, spring constants, and dissipation coefficients is straightforward. Displacements of each mass are ξi(t), with . The linear Newtonian equations of coupled motion are

This second-order system is reduced to a canonical form of coupled first-order equations by introduction of a continuous time state vector, the column vector,

where the superscript T denotes the transpose. Note the mixture of dimensional units in the elements of xc(t), identifiable with the Hamiltonian variables of position and momentum. Then Eq. (6) becomes (setting m=1 or dividing through by it)

where

defining the time-invariant 3×3 block matrices Kc and Rc, symmetric and diagonal, respectively. The structure of Bc depends on which masses are forced. For example, if only ξ2(t) is forced, then Bc would be the unit vector in the second element.

Assuming , then Ac is full-rank with three pairs of complex conjugate eigenvalues but non-orthonormal right eigenvectors. Ac and Bc are both assumed to be time-independent. Discussion of the physics and mathematics of small oscillations can be found in most classical mechanics textbooks and is omitted here. What follows is left in dimensional form to make the results most intuitively accessible.

3.1 Energy

Now consider an energy principle. Let be the position sub-vector. Define, without dissipation (Rc=0) or forcing,

Here, ℰc is the sum of the kinetic and potential energies (the minus sign compensates for the negative definitions in Kc). The non-diagonal elements of Kc redistribute the potential energy amongst the masses through time.

With finite dissipation and forcing,

If the forcing and dissipation vanish then (see Cohn, 2010, for a formal discussion of such continuous time systems.)

3.2 Discretization

Equation (1) is discretized at time intervals Δt using an Eulerian time step,

for n≥0. The prediction model is unchanged except now

and without the c subscript.

For this choice of the discrete state vector, the energy rate of change is formally analogous to that in the continuous case,

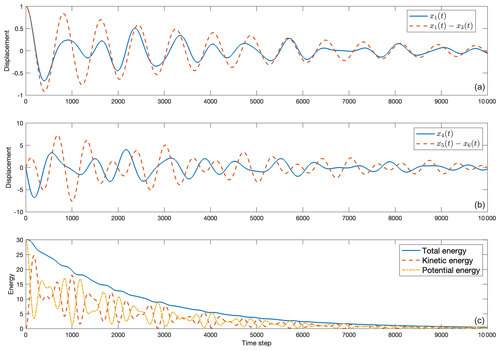

where ℰ(t) is computed as before in Eq. (10) except now using the discretized x(t). An example solution for the nearly dissipationless, unforced oscillator is provided in Fig. 2, produced by the discrete formulation ℰ(t). The potential and kinetic energies through time are shown in Fig. 2c, along with elements and derived quantities of the state vector in Fig. 2a and b. Nonzero values here arise only from the initial condition necessarily specifying both initial positions and their rates of change. A small amount of dissipation (r=0.5) was included to stabilize the particularly simple numerical scheme. The basic oscillatory nature of the state vector elements is plain, and the decay time is also visible.

Figure 2The unforced case with the initial condition vanishing except for ξ1(1)=1. Natural frequency and decay scale are apparent. (a) x1(t) (solid) and x1(t)−x3(3) (dashed). (b) x4 (solid) and x5−x6 (dashed). (c) ℰ(t) showing decay scale from the initial displacement, alongside kinetic energy (dashed) and potential energy (dot-dashed.)

The total energy declines over the entire integration time, but with small oscillations persisting after 5000 time steps. Kinetic energy is oscillatory as energy is exchanged with the potential component.

3.3 Mass–spring oscillator with observations

Note that if the innovation form of the evolution in Eq. (4) is used, the energy change becomes

explicitly showing the influence of the observations. With intermittent observations and/or with changing structures in E(t), then ℰ(t) will undergo forced abrupt changes that are a consequence of the sequential innovation.

Given the very large number of potentially erroneous elements in any choice of model, data, and data distributions, as well as the ways in which they interact when integrated through time, a comprehensive discussion even of the six-element state vector mass–spring oscillator system is difficult. Instead, some simple examples primarily exploring the influence of data density on the state estimate and of its mechanical energy are described. Numerical experiments are readily done with the model and its time constants, model time step, accuracies, and corresponding covariances of initial conditions, boundary conditions, and data. The basic problems of any linear or linearized system already emerge in this simple example.

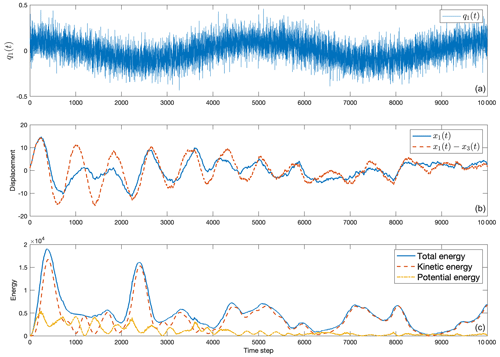

Figure 3Forced version of the same oscillator system as in Fig. 2. Forcing is at every time step in the mass 1 position alone. (a) The forcing, q(t), is given by white noise plus the visible low-frequency cosine curve. (b) x1(t) (solid) and x1(t)−x3(t) (dashed). (c) Total energy through time, ℰ(t), alongside kinetic energy (dashed) and potential energy (dot-dashed). Energy varies with the purely random process ε(t) as well as from the deterministic forcing.

The “true” model assumes the parameters , and Δt=.001 and is forced by

shown in Fig. 3a. That is, only mass one is forced in position, and with a low frequency not equal to one of the natural frequencies. In this case, and q(t)=q1(t), a scalar. The dissipation time is , and ε(t) is a white noise element with standard deviation 0.1. The initial condition is ξ1(0)=1 with all other elements vanishing; see Fig. 3b and c for an example of a forced solution of positions, velocities, and derived quantities. Accumulation of the influence of the stochastic element in the forcing depends directly upon details of the model timescales and, if ε(t) were not white noise, on its spectrum as well. In all cases the cumulative effect of a random forcing will be a random walk – with details dependent upon the forcing structure, as well as on the various model timescales.

The prediction model here has fully known initial conditions and A and B matrices, but the stochastic component of the forcing is being treated as fully unknown, i.e., ε(t)=0 in the prediction. Added white noise in the data has a standard deviation of 0.01 in all calculations.

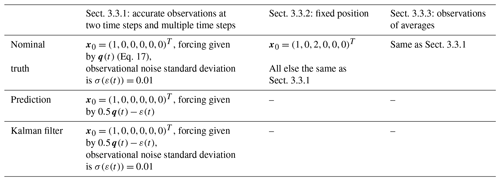

The experiments and their parameters are outlined in Table 1, where “–” is used to indicate that the same conditions as the nominal truth are used.

Table 1Numerical experiments and corresponding parameters. Common to all configurations are the settings k=30, r=0.5, and Δt=0.001, and observational noise is chosen from a Gaussian distribution. The symbol “–” indicates the same conditions as the nominal truth.

3.3.1 Accurate observations: two times and multiple times

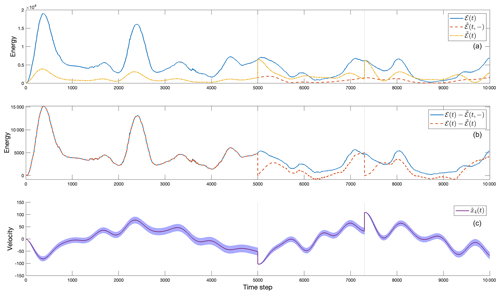

To demonstrate the most basic problem of estimating energy, consider highly accurate observations of all six generalized coordinates (i.e., positions and velocity) at two times τ1 and τ2 as displayed in Fig. 4 with E=I6, i.e., having no observational null space. The forecast model has the correct initial conditions of the true state but incorrect forcing: the deterministic component has half the amplitude of the true forcing and ε(t) is completely unknown. Noise with standard deviation 0.01 is added to the observations. Although the new estimate of the KF reconstructed state vector is an improvement over that from the pure forecast, any effort to calculate a true trend in the energy of the system, , will fail unless careful attention is paid to correcting for the conservation violations at the times of the observation.

Until the first data point (denoted with a vertical line in Fig. 4 in all three panels) is introduced the KF and prediction energies are identical, as expected. Energy discontinuities occur at each introduction of a data point (t=5000 Δt and t=7300 Δt), seen as the vertical jumps in Fig. 4a. After the first data point the KF energy tends to remain lower than the true energy, but the KF prediction is nonetheless an improvement over that from the prediction model alone. This change is shown in Fig. 4b, where the differences between true and predicted energies are plotted alongside the differences of the energy of the true and KF estimate. Even if the observations are made perfect ones (not shown), this bias error in the energy persists (see, e.g., Dee, 2005). Figure 4c offers insight into the KF prediction via the covariance matrix P(t). The uncertainty in the predicted displacement is small once new data are inserted via the analysis increment, but it quickly grows as the model is integrated beyond the time of analysis.

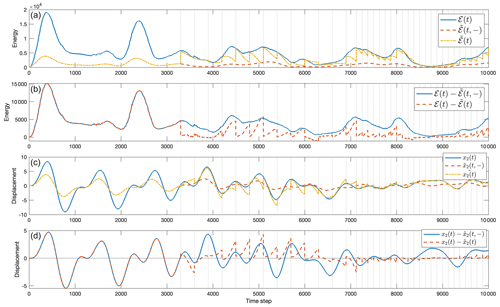

Figure 5 shows the results when observations occur in clusters having different intervals between the measurements, the first being sparser observations (300 time steps between data points) and the second being denser observations (125 time steps between data points). Visually, the displacement and energy have a periodicity imposed by the observation time intervals and readily confirmed by Fourier analysis. Again, the KF solution is the pure model prediction until data are available, at which point multiple discontinuities occur, one for every t where data are introduced.

A great many further specific calculations can provide insight as is apparent in the above examples and as inferred from the innovation equation, Eq. (4). For example, the periodic appearance of observations introduces periodicities in and hence in properties such as the energy derived from it. Persistence of the information in these observations at future times will depend upon model time constants including dissipation rates.

Figure 4(a) Total energy for the three-mass–spring oscillator system (solid), ℰ(t), the prediction model (dashed), , and the KF reconstruction (dot-dashed), . (b) The difference between the truth and prediction total

energies (solid) and the difference between the truth and KF total energies

(dashed). Data are introduced at times indicated by the vertical lines and create discontinuities, forcing to be near zero. Note that the differences do not

perfectly match at time t=0, but they are relatively small.

(c) Estimated velocity of the first mass ( from the Kalman filter, showing

the jump at the two times where there are complete near-perfect data.

The standard error bar is shown from the corresponding diagonal element of P(t), in this case given by .

Figure 5(a) Similar to Fig. 4, but showing the influence of clusters of observations. Shown are true total energy (solid), ℰ(t), plotted alongside the prediction model energy (dashed), , and calculated from the KF algorithm (dot-dashed), . (b) The difference between the “truth” and prediction (solid) alongside the difference between the truth and KF (dashed). (c) The position of mass 2, x2(t), given by the “true” model (solid), the prediction (dashed), and the KF estimate (dot-dashed). The introduction of data points forces the KF to match the state vector to observations within error estimates, creating the discontinuities expected. (d) (solid) alongside (dashed).

3.3.2 A fixed position

Exploration of the dependencies of energies of the mass–spring system is interesting and a great deal more can be said. Turn, however, to a somewhat different invariant: suppose that one of the mass positions is fixed, but with the true displacement unknown to the analyst. Significant literature exists devoted to finding changes in scalar quantities such as global mean atmospheric temperatures or oceanic currents, with the Atlantic Meridional Overturning Circulation (AMOC) being a favorite focus. These quantities are typically sub-elements of complicated models involving very large state vectors.

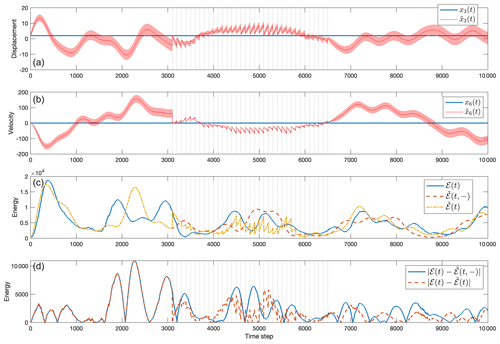

The true model is now adjusted to include the constraint that and thus . That is, a fixed displacement in mass 3 (and consequently a zero velocity in mass 3) is used in computing the true state vector. The forecast model has the correct initial condition and incorrect forcing: with a deterministic component again having half the true amplitude and fully unknown noise ε(t). Observations are assumed to be those of all displacements and velocities, with the added noise having standard deviation 0.01. Results are shown in Fig. 6. The question is whether one can accurately infer that ξ3(t) is a constant through time. A KF estimate for the fixed position, , is shown in Fig. 6a and includes a substantial error in its value (and its variations or trends) at all times. Exceptions occur when data are introduced at the vertical lines in Fig. 6a and b. Owing to the noise in the observations, the KF cannot reproduce a perfect result.

Figure 6(a) Correct value of the constant displacement x3(t) (solid) and the estimated value from the KF calculation (dot-dashed) with error bar computed from P. Vertical lines are again the observation times. (b) Correct value of the constant velocity x6(t) (solid) and the estimated value from the KF calculation (dot-dashed) with the error bar computed from P. (c) The total energy given by the true model (solid), the prediction value (dashed), and the KF estimate (dot-dashed). (d) The absolute value of the difference between the truth and prediction (solid), as well as the absolute value of the difference between the truth and the KF value (dashed).

Looking at Fig. 6a, one sees that variations in the position of mass 3 occur even during the data-dense period. The variations arise from both the entry of the data and the noise in the observations. An average taken over the two halves of the observation interval might lead to the erroneous conclusion that a decrease has taken place. Such an incorrect inference can be precluded by appropriate use of computed uncertainties. Also note the impact of the KF on the energy (Fig. 6c, d), producing artificial changes as in the previous experiment.

3.3.3 Observations of averages

Now consider a set of observations of the average of the position of masses 2 and 3, as well as of the average velocity of masses 1 and 2, mimicking the types of observations that might be available in a realistic setting. Again for optimistic simplicity, the observations are relatively accurate (including noise with standard deviation 0.01) and occur in the two different sets of periodic time intervals. The first interval has observations every 300 time steps and the second every 125 time steps. Prediction begins with the correct initial conditions, and again the forcing has half the correct amplitude with fully unknown random forcing. Figure 7 displays the results. Position estimates shown in Fig. 7c are good, but not perfect, as is also true for the total energy seen in Fig. 7a. The energy estimate carries oscillatory power with the periodicity of the oncoming observation intervals and appears in the spectral estimate (not shown) with excess energy in the oscillatory band and energy that is somewhat too low at the longest periods. Irregular observation spacing would generate a more complicated spectrum in the result.

Figure 7(a) Results for total energy when observations were of the average of the two positions x2(t) and x3(t) and the two velocities x4(t) and x5(t) at the times marked by vertical lines. (b) Total energy differences corresponding to the situation in (a). (c) Results for the displacement x2(t) estimate when observations were of averages along with error bar from P. (d) Differences between true and predicted (solid) and between true and KF (dashed).

A general discussion of null spaces involves that of the column-weighted appearing in the Kalman gain. If E is the identity (i.e., observations of all positions and velocities) and R(τ) has a sufficiently small norm, all elements of x(τ) are resolved. In the present case, with E having two rows corresponding to observations of the averages of two mass positions and of two velocity positions, the resolution analysis is more structured than the identity, with

A singular value decomposition produces two nonzero singular values, where U2 denotes the first two columns of the matrix. At rank 2, the resolution matrices, TU and TV, based on the U and V vectors, respectively, and the standard solution covariances are easily computed (Wunsch, 2006). A distributes information about the partially determined xi throughout all masses via the dynamical connections as contained in P(τ). Bias errors require specific, separate analysis.

The impact of an observation on future estimated values tends to decay in time, dependent upon the model timescales. Insight into the future influence of an observation can be obtained from the Green function discussion in the Appendix.

3.4 Uncertainties

In a linear system, a Gaussian assumption for the dependent variables is commonly appropriate. Here the quadratic-dependent energy variables become χ2-distributed. Thus, and have such distributions, but with differing means and variances and with potentially very strong correlations, so they cannot be regarded as independent variables. Determining the uncertainties of the six covarying elements making up involves some intricacy. A formal analysis can be made of the resulting probability distribution for the sum in , involving non-central χ2 distributions (Imhof, 1961; Sheil and O'Muircheartaigh, 1977; Davies, 1980). As an example, an estimate of the uncertainty could be made via a Monte Carlo approach by generating N different versions of the observations, differing in the particular choice of noise value in each and tabulating the resulting range. These uncertainties can be used to calculate, e.g., the formal significance of any apparent trend in . Implicit in such calculations is having adequate knowledge of the probability distribution from which the random variables are obtained. An important caveat is that once again bias errors such as those seen in the energy estimates in Fig. 7a must be separately determined.

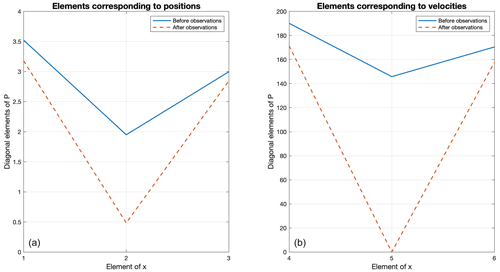

The structure of the uncertainty matrix P depends upon both the model and the detailed nature of the observations (see Appendix A; Eq. A2). Suppose observations only provide knowledge of the velocity of mass 2, x5(t). Consider P(t=7124), just before observations become available (i.e., the model has mimicked a true prediction until this point), and P(t=8250), after 10 observations of x5 have been incorporated with the Kalman filter. The resulting P(t) following the observations produces highly inhomogeneous variances (the diagonals of P). In this particular case, one of the eigenvalues of P(τ) for τ just beyond the time of any observation is almost zero, meaning that P(τ) is nearly singular (Fig. 8). The corresponding eigenvector has a value near 1 in position 5 and is near zero elsewhere. Because numerous accurate observations were made of x5(t), its uncertainty almost vanishes for that element, and a weighting of values by P(t)−1 gives it a nearly infinite weight at that time.

3.5 A fixed interval smoother

The Kalman filter and various approximations to it produce an estimate at any time, τ, taking account of data only at τ or in the past – with an influence falling as the data recede into the past at a rate dependent on the model timescales. But in many problems, such as those addressed (for one example) by the Estimating the Circulation and Climate of the Ocean (ECCO) project (Stammer et al., 2002; Wunsch and Heimbach, 2007), the goal is to find a best estimate over a finite interval, nominally, , and accounting for all of the observations, whether past or future to any τ. Furthermore, as already noted above, physical sense requires satisfaction of the generalized (to account for sources and sinks) energy, mass, and other important conservation rules. How can that be done?

In distinction to the filtering goal underlying a KF best prediction, the fixed interval problem is generally known as that of smoothing. Several approaches exist. One of the most interesting, and one leading to the ability to parse data versus model structure impact over the whole interval, is called the Rauch–Tung–Striebel (RTS) smoothing algorithm. In that algorithm, it is assumed that a true KF has already been run over the full time interval and that the resulting P(t), , and remain available. The basic idea is subsumed in the algorithm

with

This new estimate, using data future to t, depends upon a weighted average of the previous best estimate with its difference between the original pure prediction, , and the improvement (if any) made of the later estimate at t+Δt. The latter uses any data that occurred after that time. Thus a backwards-in-time recursion of Eq. (19) is done – starting from the best estimate at the final KF time, t=tf, beyond which no future data occur. The RTS coefficient matrix, L(t+Δt), has a particular structure accounting for the correlation between and generated by the KF. Equation (A5) calculates the new uncertainty, .

In this algorithm a correction is also necessarily made to the initial assumptions concerning q(t), producing a new set of vector forcings, , such that the new estimate, , exactly satisfies Eq. (1) with at all times over the interval. If the true model satisfies energy conservation, so will the new estimate. That the smoothed estimate satisfies the free-running but adjusted model parameters and thus all of its implied conservation laws is demonstrated by Bryson and Ho (1975) in Chap. 13. Estimated , often called the “control vector”, has its own computable uncertainty found from Eq. (A5b). In many problems, improved knowledge of the forcing field and/or boundary conditions may be equally as or more important than improvement of the state vector. Application of the RTS algorithm is made in the following section to a slightly more geophysical example.

Consider the smoothing problem in a geophysical fluid dynamics toy model. Realism is still not the goal, which remains as the demonstration of various elements making up estimates in simplified settings.

Figure 8From left to right: (a) the first three diagonal elements of P before any observations of x5 (solid) and after 10 observations of x5 (dashed). (b) The last three diagonal elements of P before any observations (solid) and after 10 observations (dashed.)

4.1 Rossby Wave normal modes

A flat-bottom, linearized β-plane Rossby wave system has a two-dimensional governing equation for the streamfunction, ψ,

in a square β-plane basin of horizontal dimension L. The parameter is the variation of the Coriolis parameter, f, with the latitude coordinate, y. This problem is representative of those involving both space and time structures, including boundary conditions (spatial variables x,y should not be confused with the state vector or data variables). Equation (21) and other geophysically important ones are not self-adjoint, and the general discussion of quadratic invariants inevitably leads to adjoint operators (see Morse and Feshbach, 1953, or for bounding problems, see Sewell et al. (1987), Chap. 3, 4).

The closed-basin problem was considered by Longuet-Higgins (1964). Pedlosky (1965) and LaCasce (2002) provide helpful discussions of normal modes. Relevant real observational data are discussed by Luther (1982), Woodworth et al. (1995), Ponte (1997), Thomson and Fine (2021), and others. The domain here is and with the boundary condition ψ=0 on all four boundaries.

Introduce non-dimensional primed variables , , , and . Letting a be the radius of the Earth and , Eq. (21) is non-dimensionalized as

Further choosing L=a (since a is of the same order as the width of the Pacific) and then omitting the primes from here on except for β′,

Haier et al. (2006) describe numerical solution methods that specifically conserve invariants, but these methods are not used here. Gaspar and Wunsch (1989) employed this system for a demonstration of sequential estimation with altimetric data. Here a different state vector is used.

An analytical solution to Eq. (23) is

along with the dispersion relation,

where cnm is a coefficient dependent only upon initial conditions in the unforced case.

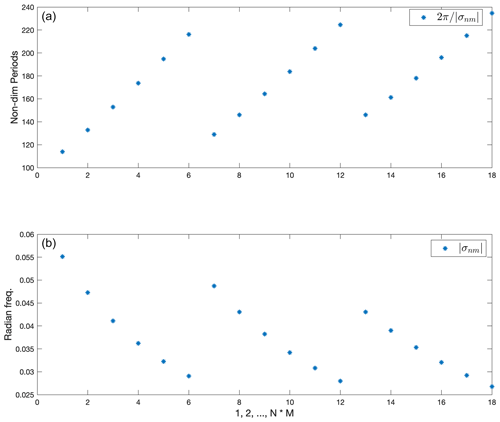

Figure 9(a) Non-dimensional periods grouped by fixed n and increasing m. (b) Inverse of the periods in (a), giving us the frequencies.

A state vector is then

where p is a linear ordering of n and m. Total dimension is then N⋅M, with N and M the upper limits in Eq. (24). State transition can be written in the now familiar form

For numerical examples with the KF and RTS smoother, a random forcing qj(t) is introduced at every step so that

Note that with the introduction of a forcing the solution described by coefficients cp does not strictly satisfy Eq. (23) but is rather a simplified version of a forced solution. Discussion of this dynamical system is still useful for understanding the difficulties that arise in numerical data assimilation. An example of a true forced solution to Eq. (21) is examined in Pedlosky (1965).

The problem is now made a bit more interesting by addition of a steady component to ψ: namely, the solution ψs(x,y) from Stommel (1948), whose governing equation is

where Ra is a Rayleigh friction coefficient.

An approximate solution, written in the simple boundary layer interior form is (e.g., Pedlosky, 1965)

which leads to a small error in the eastern boundary condition (numerical calculations that follow used the full Stommel, 1948, solution). The sin πy arises from Stommel's assumed time-independent wind stress curl.

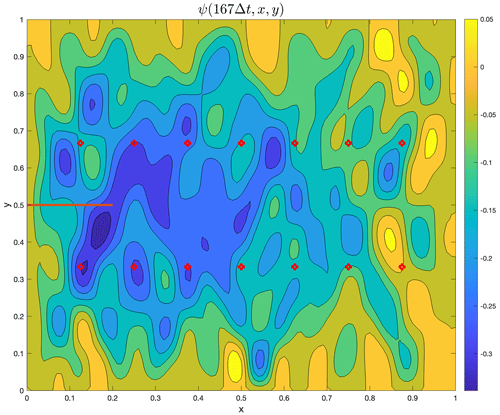

Figure 10Stream function at t=167Δt, with the normal modes superimposed on the time–independent Stommel solution. The Coriolis parameter f is computed at a latitude of 30∘. At later times, the mean flow becomes difficult to visually detect in the presence of the growing normal modes under the forcing. The markers indicate the locations of the observational data, and the horizontal line at y=0.5 is the distance over which the boundary current transport is defined.

The new state vector becomes

now of total dimension .

The state transition matrix A is diagonal with the first N⋅M diagonal elements given by diag(exp (−iσjΔt)) and the final diagonal element the overall square of dimension . A small numerical dissipation is introduced, multiplying A by exp (−b) for b>0, to accommodate loss of memory, e.g., as a conventional Rayleigh dissipation. The constant b is chosen as in the numerical code. The matrix operator B is diagonal with the first N⋅M diagonal elements all equal to 1, and (no forcing is added to the steady solution). Some special care in computing covariances must be taken when using complex state vectors and transition matrices (Schreier and Scharf, 2010).

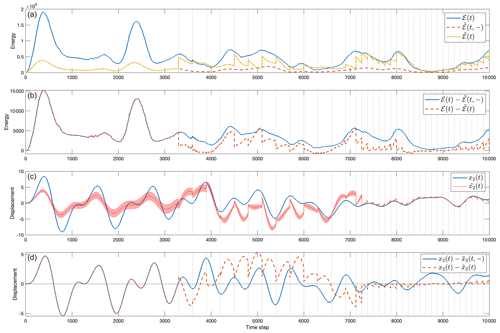

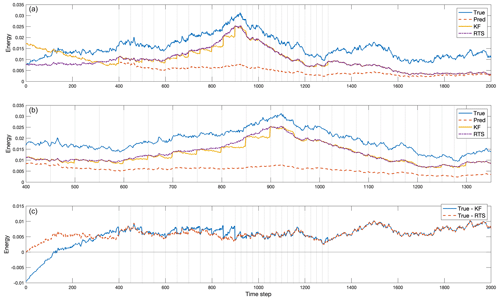

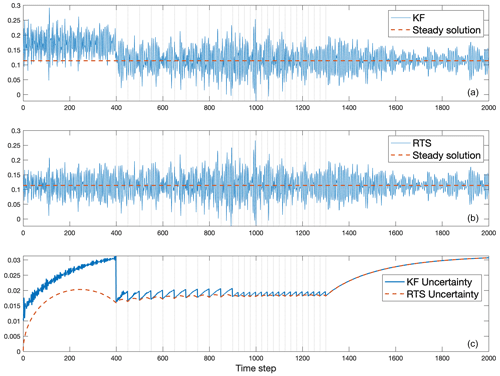

Figure 11(a) The energy in the Rossby wave example, i.e., Φ(t) defined by Eq. (31), computed from the non-dimensional state vector of the true model, prediction, KF, and RTS reconstructions. In the KF the initial conditions are 20 % too large, and the forcing is 50 % too small. Full knowledge of A is assumed. (b) An expanded plot for time steps where observations were incorporated into the result. The RTS smoother produces improved energy, namely one without discontinuities and marginally more accurate than the KF. (c) Difference between the true values and those from the KF (solid line) and the difference between the true values and RTS smoother values (dashed line).

Consistent with the analysis in Pedlosky (1965), no westward intensification exists in the normal modes, which decay as a whole. Rayleigh friction of the time-dependent modes is permitted to be different from that in the time-independent mean flow – a physically acceptable situation.

If q(t)=0 and with no dissipation, then Eq. (23) has several useful conservation invariants including the quadratic invariants of the kinetic energy and of the variance in ψ,

(conjugate transpose), as well as the linear invariant of the vorticity or circulation – when integrated over the entire basin domain. As above, estimates of the quadratic and linear conservation rules will depend explicitly on the initial conditions, forces, distribution, and accuracy of the data, as well as the covariances and bias errors assigned to all of them.

4.1.1 System with observations

Using the KF plus RTS smoother for sequential estimation, estimates of Φ(t) as well as the transport of the western boundary current (WBC), TWBC(t), are calculated; the latter is constant in time, although that is unknown to the analyst. Random noise in TWBC(t) exists from both physical noise – the normal modes – and that of the observations y(tj), as would be the situation in nature.

Equation (1) with the above A and B is used to generate the true fields. Initial conditions, x(0), in the modal components are

Modal periods are shown in Fig. 9. Parameters are fixed as Δt=29, , and f=30, and the random forcing qj has standard deviation 0.002.

The prediction model uses 0.5qp(t) as a first guess for qp(t), where qp(t) represents the true random forcing values, and the initial conditions are too large as 1.5x(0). Noisy observations y(t) are assumed to exist at the positions denoted with red dots in Fig. 10, and the measurement noise has a standard deviation of 0.001.

The field ψ(t=167Δt) as given by the true model is shown in Fig. 10, keeping in mind that apart from the time mean ψ, the structure seen is the result of a particular set of random forcings.

4.2 Aliasing

In isolation, the observations will time-alias the field if not taken at a minimum interval of the shortest period present, here 4Δt. A spatial alias occurs if the separation between observations is larger than one-half of the shortest wavelength present (here . Both these phenomena are present in what follows, but their impact is minimized by the presence of the time evolution model.

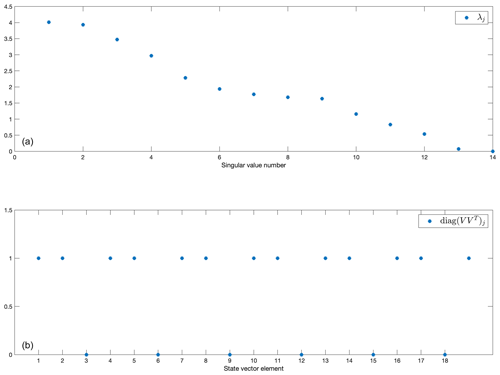

Figure 12(a) Eigenvalues of the first 14 singular vectors of E. The rank is 12 with 14 observational positions. (b) Diagonal elements of the rank-12 solution resolution matrix, showing lack of information for several of the modes. A value of 1 means that the mode is fully resolved by the observations. All variables are non-dimensional.

4.3 Results: Kalman filter and RTS smoother

For the KF and RTS algorithms the model is run for tf=2000 time steps with the above parameters.

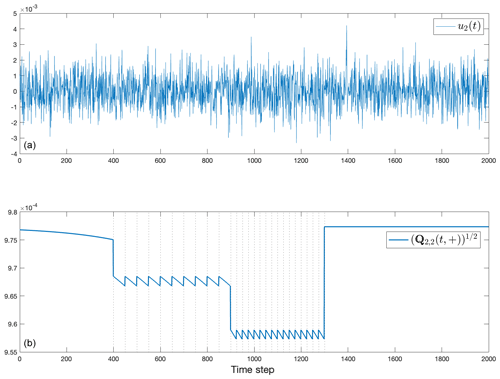

Figure 13(a) One element, u2(t), of the control vector correction estimate and (b) its standard error through time, showing the drop towards zero at the data time and the slow increase towards a higher value when no data are available (we note here that is computed from t=Tf backwards to t=0).

4.3.1 Energy estimates

A KF estimate is computed and the results stored. As in Sect. 3.1.1, observations are introduced in two intervals, each with a different density of observations: initially data are introduced with 50Δt between them and subsequently reduced to 25Δt between observations. Observations cease prior to Tf, mimicking a pure prediction interval following the observations.

The estimated values of the quadratic Φ(t) are shown in Fig. 11 for the true values, KF estimates, RTS smoother estimates, and the pure model prediction. The KF and prediction estimates agree until the first observation time, at which point a clear discontinuity is seen. As additional observations accumulate, ΦKF(t) jumps by varying amounts depending upon the particulars of the observations and their noise. Over the entire observation interval the energy reconstructed by the KF remains low – a systematic error owing to the sparse observations and null space of E. Here the forcing amplitude overall dominates the effects of the incorrect initial conditions. Uncertainty estimates for energy would once again come from summations of correlated χ2 variables of differing means. In the present case, important systematic errors are visible as the offsets between the curves in Fig. 11.

This system can theoretically be overdetermined by letting the number of observations at time t exceed the number of unknowns – should the null space of E(t) then vanish. As expected, with 14 covarying observations and 18 time-varying unknowns xi(t), rank-12 time-independent E(t)=E has a null space, and thus energy in the true field is missed even if the observations are perfect. As is well-known in inverse methods, the smaller eigenvalues of E and their corresponding eigenvectors are most susceptible to noise biases. The solution null space of this particular E(t) is found from the solution eigenvectors of the singular value decomposition, The solution resolution matrix at rank K=12, is shown in Fig. 12. Thus the observations carry no information about modes (as ordered) 3, 6, 9, 12, 15, and 18. In a real situation, if control over positioning of the observations was possible, this result could sensibly be modified and/or a strengthening of the weaker singular values could be achieved. Knowledge of the null space structure is important in the interpretation of results.

A more general discussion of null spaces involves that of the weighted appearing in the Kalman gain (Eq. 5). If is the Cholesky factor of (Wunsch, 2006, page 56), then is the conventional column weighting of E at time τ, and the resolution analysis would be applied to that combination. A diagonal A does not distribute information from any covariance amongst the elements xj(τ) and which would be carried in .

Turning now to the RTS smoother, Fig. 11 shows that the energy in the smoothed solution, ΦRTS(t), is continuous (up to the model time-stepping changes). The only information available to the prediction prior to the observational interval lies in the initial conditions, which were given incorrect values, leading to an initial uncertainty. Estimated unknown elements u(t), the control vector of q(t), in this interval also have a large variance.

One element through time of the estimated control vector and its standard error are shown in Fig. 13. The complex result of the insertion of data is apparent. As with the KF, discussion of any systematic errors has to take place outside of the formalities leading to the smoothed solution. This RTS solution does conserve Φ(t) as well as other properties (circulation).

Figure 14(a) Estimated non-dimensional western boundary current transport from the Kalman filter (solid line) and the steady western boundary current from the Stommel solution (dashed line). (b) Same as (a) except showing the smoothed TWBC from the RTS smoother next to the steady solution. Vertical dashed lines indicate time steps where data were available. (c) Uncertainty in the TWBC predictions over time, computed from the operators P(t) and .

4.3.2 Western boundary current estimates

Consider now determination of , the north–south transport across latitude y0 at each time step, whose true value is constant. TWBC(t) is computed from the velocity or stream function as

the stream-function difference between a longitude pair, . From the boundary condition, . The horizontal line segment in Fig. 10 indicates the location of the zonal section for the experiment at y0=0.5, extending from x=0 to x0=0.2. In the present context, five different values of TWBC(t) are relevant: (a) the true, steady, time-invariant value, computed from the Stommel solution; (b) the true time-dependent value including mode contributions from Eq. (27); (c) the estimated value from the prediction model; (d) the estimate from the KF; and (e) the estimate from the RTS smoother. Figure 14a displays the transport computed from the Stommel solution ψs alongside the transport computed from the KF estimate. Panel (b) in Fig. 14 displays the same steady transport alongside the RTS estimate. Values here are dominated by the variability induced by the normal modes, leading to a random walk. Note that the result can depend sensitively on positions x0 and y0, as well as the particular spatial structure of any given normal mode.

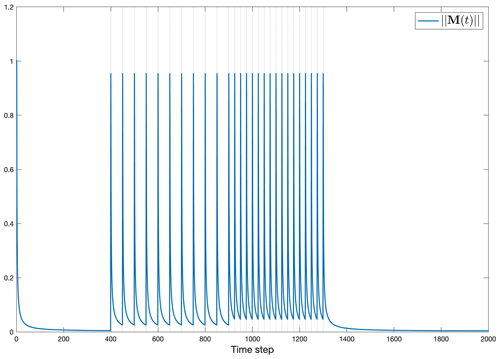

Figure 15(a) Norm of the operator L controlling the backwards-in-time state estimate (see Eq. 20, RTS smoother for the full equation). (b) The real (solid line) and imaginary (dashed line) components of L22(t).

In the KF reconstruction (Fig. 14a), observations move the WBC transport values closer to the steady solution, seen via the jump at t=400, but remain noisy. Transport value uncertainties are derived from the P of the state vector using Eq. (A1) and shown in Fig. 14c. Within the observation interval the estimates are indistinguishable from the true value but still have a wide uncertainty with timescales present from both the natural variability and the regular injection times of the data. The magnitude of the uncertainty during the observation intervals is still roughly 10 % of the magnitude of the KF estimate.

Figure 14b shows the behavior of the estimate of TWBC(t) after the RTS smoother has been applied. Most noticeably, the discontinuity that occurs at the onset of the observations has been removed. A test of the null hypothesis that the transport computed from the RTS smoother was indistinguishable from a steady value is based upon an analysis using the uncertainty (not shown).

The very large uncertainty prior to the onset of data, even with use of a smoothing algorithm, is a central reason that the state estimate produced by the Estimating the Circulation and Climate of the Ocean (ECCO) project (e.g., Stammer et al., 2002; Fukumori et al., 2018) is confined to the interval following 1992 when the data become far denser than before through the advent of ocean-dedicated satellite altimetry and nascent Argo arrays. Estimates prior to a dense data interval depend greatly upon the time durations built into the system, which in the present case are limited by the longest normal mode period. The real ocean does include some very long memory (Wunsch and Heimbach, 2008), but the estimation skill will depend directly on the specific physical variables of concern (ECCO estimates are based upon a different algorithm using iterative least-squares and Lagrange multipliers; see Stammer et al., 2002). For a linear system, results are identical to those using a sequential smoother (see Fukumori et al., 2018).

Some understanding of the impact via the smoother of later observations on KF time estimates can be found from the operator L(t). Figure 15 shows the norm of the operator L (Eq. 20) controlling the correction to earlier state estimates, along with the time dependence of one of its diagonal elements. As always, the temporal structure of L(t) depends directly upon the time constants embedded in A and the compositions of P(t) and . In turn, the latter are determined by any earlier information, including initial conditions, as well as the magnitudes and distributions of later forcing and data accuracies. Generalizations are not easy.

The norm of the gain matrix M(t), used for computation of the control vector in Eq. (A4), provides a measure of its importance relative to the prior estimate and is displayed in Fig. 16. Here the dependence is directly upon the a priori known control variance q(t), the data distributions, and . The limiting cases discussed above for the state vector also provide insights here.

4.3.3 Spectra

Computation of the Fourier spectral estimates of the various estimates of any state vector element or combination is straightforward, and the z transforms in the Appendix provide an analytic approach. What is not so straightforward is the interpretation of the result in this non-statistically stationary system. Care must be taken to account for the nonstationarity.

In sequential estimation methods, the behavior of dynamical system invariants and conservation laws, including energy or circulation or scalar inventories, or derived ones such as a thermocline depth, depend, as shown using toy models, upon a number of parameters. These parameters include the timescales embedded in the dynamical system, the temporal distribution of the data used in the sequential estimation relative to the embedded timescales, and the accuracies of initial conditions, boundary conditions, sources and sinks, and data, as well as the accuracy of the governing time evolution model. Errors in any of these parameters can lead to physically significant errors in estimates of the state and control vectors as well as any quantity derived from them.

Estimates depend directly upon the accuracies of the assumed and calculated uncertainties in all of the elements making up the estimation system. Impacts of data insertions can range from very short time intervals to those extending far into the future. Because of model–data interplay, the only easy generalization is that the user must check the accuracies of all of these elements, including the appearance of systematic errors in any of them (e.g., Dee, 2005), or of periodicities arising solely from data distributions. When feasible, a strong clue to the presence of systematic errors in energies, as one example, lies in determining the null space of the observation matrices coupled with the structure of the state evolution matrices, A. Analogous examples have been computed for advection–diffusion systems (not shown, but see Marchal and Zhao, 2021) with results concerning, in one example, estimates of fixed total tracer inventories.

Use of Kalman filters, and the simple analogs most often used in weather prediction or “reanalyses”, produces results that are always suboptimal when the goal is reconstruction because data future to the estimate have not been used. A second consequence is the failure of the result to satisfy any particular dynamical time evolution model, implying loss of energy, mass, etc. conservation laws. In the Rossby wave example, reconstructions of the constant western boundary current transport improved as expected from that of the KF by using future data and the Rauch–Tung–Striebel (RTS) smoother. Estimates can nonetheless still contain large uncertainties – quantifiable from the accompanying algorithmic equations.

All possible error sources have not been explored. In particular, only linear systems were analyzed, assuming exact knowledge of the state transition matrix, A, and the data distribution matrix, B. Notation was simplified by using only time-independent versions of them. Nonlinear problems arising from errors in A and B will be described elsewhere. Other interesting nonlinear problems include those wherein the observations are derived quantities of the state vector, or the observations – such as a speed – are nonlinear in the state vector.

Figure 16Norm of the gain matrix M through time and which determines the magnitude and persistence of inferred changes in the control variables u(t).

Unless, as in weather prediction, short-term predictions are almost immediately testable by comparison with the observed outcome, physical insights into the system behavior are essential, along with an understanding of the structure of the imputed statistical relationships. As considerable literature cited above has made clear, the inference of trends in properties and understanding of the physics (or biology or chemistry) in the presence of time-evolving observation systems require particular attention. At a minimum, one should test any such system against the behavior of a known result – for example, treating a general circulation model as the “truth” and then running the smoothing algorithms to test whether that truth is forthcoming.

The RTS algorithm is only one choice from several approaches to the finite interval estimation problem. Alternatives include the least-squares and/or Lagrange multiplier approach of the ECCO project, which in the linear case can be demonstrated to produce identical results. This approach guarantees exact dynamical and kinematic consistency of the state estimate (Stammer et al., 2002; Stammer et al., 2016; Wunsch and Heimbach, 2007), a key requirement when seeking physical understanding of the results. Such consistency is ensured by restricting observation-induced updates to those that are formally independent inputs to the conservation laws, i.e., initial, surface, or – where relevant – lateral boundary conditions. This consistency ensures no artificial source or sink terms in the conservation equations. The ECCO project has conducted this approach with considerable success over the past 2 decades and demonstrated the merits of accurate determination of heat, freshwater, and momentum budgets and their constituents (Heimbach et al., 2019). The Lagrange multiplier framework similarly provides a general inverse modeling framework, which addresses several estimation problems either separately or jointly: (i) inference of optimal initial conditions, such as produced by incremental so-called four-dimensional variational data assimilation practiced in numerical weather prediction; (ii) inference of updated (or corrected) boundary conditions, such as practiced in flux inversion methods; (iii) inference of optimal model parameters, such as done in formal parameter calibration problems; or (iv) any combination thereof. More details of this more general framework in the context of similar toy models will be presented in a sequel paper.

A1 Kalman filter

The model state transition equation is that in Eq. (1), and the weighted averaging equation is Eq. (4), with the gain matrix K(t) defined in Eq. (5). Time evolution of the covariance matrix of is governed by

and

with E and R defined in the text. The matrix symbol Γ is introduced for a situation in which the control distribution over the state differs from that in B. Otherwise they are identical. Because P is square of the state vector length, calculating it is normally the major computational burden in the use of a Kalman filter.

Under some circumstances in which a system including observation injection reaches a steady state, the time index may be omitted in both the KF and the RTS smoother. Time independence is commonly assumed when the rigorous formulation for the KF is replaced by an ad hoc constant gain matrix K.

A2 RTS smoother

In addition to Eqs. (19) and (20), the Rauch–Tung–Striebel smoother estimates

for the updated control u(t); q(t) is the assumed covariance of u(t) (the uncertainty in q(t)), and Γ is again often equal to B. Then the corresponding uncertainties of the smoothed estimates are

One can gain insight into this filter–smoother machinery by considering its operation on a scalar state vector with scalar observations (not shown here).

B1 KF response

The impact at other times of having data at time t can lend important physical insight into the sequential analyses. Define an innovation vector,

that is, dδ is a vector of Kronecker deltas representing the difference . Solutions to the innovation Eq. (4) are the columns of the Green function matrix,

K, now fixed in time, is sought as an indication of delta impulse effects of observations on the prediction model at time τ.

Define the scalar complex variable,

where s is the frequency. Then the discrete Fourier transform of Eq. (B7) (the z transform – a matrix polynomial in z) is

The norm of the variable defines the “resolvent” of A in the full complex plane (see Trefethen and Embree, 2005), but here, only is of direct interest: that is, only on the unit circle. The full complex plane carries information about the behavior of A, including stability.

Here and

If a suitably defined norm of A is less than 1,

and the solution matrix in time is the causal vector sequence (no disturbance before t=τ) of columns of

where G can be obtained without the z transform, but the frequency content of these results is of interest.

B2 Green function of smoother innovation

As with the innovation equation for filtering, Eq. (19) introduces a disturbance into the previous estimate, , in which the structure of L(t) determines the magnitude and timescales of observational disturbances propagated backwards in time. It provides direct insight into the extent to which later measurements influence earlier ones. As an example, suppose that the KF has been run to time Tf so that , which is the only measurement. Let the innovation, , be a matrix of δ functions in separate columns,

Then a backwards-in-time matrix Green function is

The various timescales embedded in L depend upon those in , and P(t), as well as with many observations including those of the observation intervals and any structure in the observational noise. Similarly, the control modification will be determined by if q(t)Γ(t)T values are constant in time.

MATLAB codes used here are available directly from Sarah Williamson.

No data sets were used in this article.

CW designed the study, performed initial calculations, and did the initial writing. Calculations were fully redone and updated by SW. PH advised on, provided updates to, and checked accuracies of the paper.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Work by Carl Wunsch was originally done at home during the Trump–Covid apocalypse period. We would like to thank the two anonymous reviewers for providing detailed, helpful comments on the first copy of this paper. Detlef Stammer provided many useful suggestions for an earlier version of the paper.

Supported in part by NASA grant ECCO Connections (via JPL/Caltech sub-awards) and NSF CSSI grant no. 2103942 (DJ4Earth).

This paper was edited by Ismael Hernández-Carrasco and reviewed by two anonymous referees.

Bengtsson, L., Hagemann, S., and Hodges, K. I.: Can climate trends be calculated from reanalysis data?, J. Geophys. Res.-Atmos., 109, https://doi.org/10.1029/2004JD004536, 2004. a

Bengtsson, L., Arkin, P., Berrisford, P., Bougeault, P., Folland, C. K., Gordon, C., Haines, K., Hodges, K. I., Jones, P., Kallberg, P., et al.: The need for a dynamical climate reanalysis, B. Am. Meteorol. Soc., 88, 495–501, 2007. a

Boers, N.: Observation-based early-warning signals for a collapse of the Atlantic Meridional Overturning Circulation, Nat. Clim. Change, 11, 680–688, 2021. a

Bromwich, D. H. and Fogt, R. L.: Strong trends in the skill of the ERA-40 and NCEP–NCAR reanalyses in the high and midlatitudes of the Southern Hemisphere, 1958–2001, J. Climate, 17, 4603–4619, 2004. a

Bryson, A. E. and Ho, Y.-C.: Applied optimal control, revised printing, Hemisphere, New York, https://doi.org/10.1201/9781315137667, 1975. a, b

Carton, J. A. and Giese, B. S.: A reanalysis of ocean climate using Simple Ocean Data Assimilation (SODA), Mon. Weather Rev., 136, 2999–3017, 2008. a

Cohn, S. E.: The principle of energetic consistency in data assimilation, in: Data Assimilation, Springer, 137–216, 2010. a, b

Davies, R. B.: Algorithm AS 155: The distribution of a linear combination of χ 2 random variables, Appl. Stat., 29, 323–333, https://doi.org//10.2307/2346911, 1980. a

Dee, D. P.: Bias and data assimilation, Q. J. Roy. Meteor. Soc., 131, 3323–3343, 2005. a, b, c, d

Fukumori, I., Heimbach, P., Ponte, R. M., and Wunsch, C.: A dynamically consistent, multivariable ocean climatology, B. Am. Meteorol. Soc., 99, 2107–2128, 2018. a, b

Gaspar, P. and Wunsch, C.: Estimates from altimeter data of barotropic Rossby waves in the northwestern Atlantic Ocean, J. Phys. Ocean., 19, 1821–1844, 1989. a

Gelaro, R., McCarty, W., Suárez, M. J., Todling, R., Molod, A., Takacs, L., Randles, C. A., Darmenov, A., Bosilovich, M. G., Reichle, R., et al.: The modern-era retrospective analysis for research and applications, version 2 (MERRA-2), J. Climate, 30, 5419–5454, 2017. a

Goldstein, H.: Classical Mechanics, Addison-Wesley, Reading, Mass, ISBN 9780201657029, 1980. a

Goodwin, G. and Sin, K.: Adaptive Filtering Prediciton and Control, Prentice-Hall, Englewood Cliffs, N.J., ISBN 10 013004069X, 1984. a

Haier, E., Lubich, C., and Wanner, G.: Geometric Numerical integration: structure-preserving algorithms for ordinary differential equations, Springer, ISBN 978-3-540-30663-4, 2006. a

Heimbach, P., Fukumori, I., Hill, C. N., Ponte, R. M., Stammer, D., Wunsch, C., Campin, J.-M., Cornuelle, B., Fenty, I., Forget, G., et al.: Putting it all together: Adding value to the global ocean and climate observing systems with complete self-consistent ocean state and parameter estimates, Front. Mar. Sci., 6, https://doi.org/10.3389/fmars.2019.00055, 2019. a

Hu, S., Sprintall, J., Guan, C., McPhaden, M. J., Wang, F., Hu, D., and Cai, W.: Deep-reaching acceleration of global mean ocean circulation over the past two decades, Sci. Adv., 6, eaax7727, https://doi.org/10.1126/sciadv.aax7727, 2020. a

Imhof, J.-P.: Computing the distribution of quadratic forms in normal variables, Biometrika, 48, 419–426, 1961. a

Janjić, T., McLaughlin, D., Cohn, S. E., and Verlaan, M.: Conservation of mass and preservation of positivity with ensemble-type Kalman filter algorithms, Mon. Weather Rev., 142, 755–773, 2014. a

LaCasce, J.: On turbulence and normal modes in a basin, J. Mar. Res., 60, 431–460, 2002. a

Longuet-Higgins, H. C.: Planetary waves on a rotating sphere, P. Roy. Soc. A-Math. Phy., 279, 446–473, 1964. a

Luther, D. S.: Evidence of a 4–6 day barotropic, planetary oscillation of the Pacific Ocean, J. Phys. Ocean., 12, 644–657, 1982. a

Marchal, O. and Zhao, N.: On the Estimation of Deep Atlantic Ventilation from Fossil Radiocarbon Records, Part II: (In) consistency with Modern Estimates, J. Phys. Ocean., 51, 2681–2704, 2021. a

McCuskey, S.: An Introduction to Advanced Dynamics, Addison-Wesley, Reading, Mass., ISBN 0201045605, 1959. a

Morse, P. and Feshbach, H.: Methods of Theoretical Physics, McGraw-Hill, New York, ISBN 10 007043316X, 1953. a

Pedlosky, J.: A study of the time dependent ocean circulation, J. Atmos. Sci., 22, 267–272, 1965. a, b, c, d

Ponte, R. M.: Nonequilibrium response of the global ocean to the 5-day Rossby–Haurwitz wave in atmospheric surface pressure, J. Phys. Ocean., 27, 2158–2168, 1997. a

Schreier, P. J. and Scharf, L. L.: Statistical signal processing of complex-valued data: the theory of improper and noncircular signals, Cambridge university press, ISBN 1139487620, 2010. a

Sewell, M. J., Crighton, C., et al.: Maximum and minimum principles: a unified approach with applications, Vol. 1, CUP Archive, ISBN 0521332443, 1987. a

Sheil, J. and O'Muircheartaigh, I.: Algorithm AS 106: The distribution of non-negative quadratic forms in normal variables, J. R. Stat. Soc., 26, 92–98, 1977. a

Stammer, D., Wunsch, C., Giering, R., Eckert, C., Heimbach, P., Marotzke, J., Adcroft, A., Hill, C., and Marshall, J.: Global ocean circulation during 1992–1997, estimated from ocean observations and a general circulation model, J. Geophys. Res.-Oceans, 107, https://doi.org/10.1029/2001JC000888, 2002. a, b, c, d

Stammer, D., Balmaseda, M., Heimbach, P., Köhl, A., and Weaver, A.: Ocean data assimilation in support of climate applications: status and perspectives, Annu. Rev. Mar. Sci., 8, 491–518, 2016. a

Stengel, R. F.: Stochastic optimal control: theory and application, John Wiley & Sons, Inc., ISBN 10 0471864625, 1986. a

Stommel, H.: The westward intensification of wind-driven ocean currents, EOS T. Am. Geophys. Un., 29, 202–206, 1948. a

Strang, G.: Computational science and engineering, Vol. 791, Wellesley-Cambridge Press Wellesley, ISBN-10 0961408812, 2007. a

Thomson, R. E. and Fine, I. V.: Revisiting the ocean’s nonisostatic response to 5-day atmospheric loading: New results based on global bottom pressure records and numerical modeling, J. Phys. Ocean., 51, 2845–2859, 2021. a

Thorne, P. and Vose, R.: Reanalyses suitable for characterizing long-term trends, B. Am. Meteorol. Soc., 91, 353–362, 2010. a

Woodworth, P., Windle, S., and Vassie, J.: Departures from the local inverse barometer model at periods of 5 days in the central South Atlantic, J. Geophys. Res.-Oceans, 100, 18281–18290, 1995. a

Wunsch, C.: Discrete inverse and state estimation problems: with geophysical fluid applications, Cambridge University Press, https://doi.org/10.1017/CBO9780511535949, 2006. a, b, c, d, e, f

Wunsch, C.: Is the ocean speeding up?, Ocean surface energy trends, J. Phys. Ocean., 50, 3205–3217, 2020. a

Wunsch, C. and Heimbach, P.: Practical global oceanic state estimation, Physica D, 230, 197–208, 2007. a, b

Wunsch, C. and Heimbach, P.: How long to oceanic tracer and proxy equilibrium?, Quat. Sci. Rev., 27, 637–651, 2008. a

- Abstract

- Introduction

- Notation and some generic inferences

- Example 1: mass–spring oscillators

- Example 2: barotropic Rossby waves

- Discussion and conclusions

- Appendix A: Notation and equations

- Appendix B: Green function analysis of estimates

- Code availability

- Data availability

- Author contributions

- Competing interests

- Disclaimer

- Acknowledgements

- Financial support

- Review statement

- References

climateincludes all sub-elements (ocean, atmosphere, ice, etc.). A common form of combination arises from sequential estimation theory, a methodology susceptible to a variety of errors that can accumulate through time for long records. Using two simple analogs, examples of these errors are identified and discussed, along with suggestions for accommodating them.

- Abstract

- Introduction

- Notation and some generic inferences

- Example 1: mass–spring oscillators

- Example 2: barotropic Rossby waves

- Discussion and conclusions

- Appendix A: Notation and equations

- Appendix B: Green function analysis of estimates

- Code availability

- Data availability

- Author contributions

- Competing interests

- Disclaimer

- Acknowledgements

- Financial support

- Review statement

- References